ISS Utilization: METERON (International Space Station: Multi-Purpose End-To-End Robotic Operation Network)


ISS: METERON (International Space Station Utilization: Multi-Purpose End-To-End Robotic Operation Network) / Telerobotics & Haptics

METERON is an ESA-led suite of technology demonstration experiments that uses the ISS (International Space Station) to validate advanced technologies for telerobotics from space. The project aims to validate both autonomous and real-time telerobotic operations from space to ground, and to answer key questions about the technologies required for future space exploration scenarios. To test the scenarios and validate the related technologies, robots on Earth will be controlled from inside the ISS with haptic feedback and high situational awareness. The control devices used in space will be force-reflecting joysticks and arm exoskeletons. 1)

Note: “Haptics” is any form of interaction involving touch (from Greek haptikos, from haptesthai: to grasp, to touch). Haptic technology interfaces with the user through the sense of touch. Haptics is the science of applying touch (tactile) sensation and control to interaction with computer applications.

Primary Robotics Goals of METERON

• Technology Validation (advanced mechatronics & wearable haptic devices)

• Telepresence in the space (µg) environment (from delays > 20 ms up to supervised autonomy)

• Human-Robot Interaction in highly constrained environments

• Inter-operability of groups of robots and control devices

METERON is being carried out in partnership with DLR (Institute of Robotics & Mechatronics), NASA [Johnson Space Center, Ames Research Center, JPL (Jet Propulsion Laboratory)] and Roscosmos with Russian partners (RTC Institute St. Petersburg, Energia).

The ESA/ESTEC Telerobotics Laboratory is responsible for defining, implementing and conducting the telerobotics experiments, and in particular for developing the haptic devices, the controllers and the complete on-board control station for the ISS.

The ESA Telerobotics & Haptics Laboratory is an engineering research laboratory that performs fundamental research in telerobotics, mechatronics, haptics and human-robot interaction. The Laboratory's output supports novel spaceflight projects through the pre-development and demonstration of critical technologies. Its research areas are (1) the design of human-centric mechatronic haptic devices, (2) control for distributed telerobotic systems, (3) the optimization of perceptive feedback for humans, and (4) the design and development of advanced computational frameworks, tools and APIs (Application Programming Interfaces) to support general telerobotics and mechatronics research.

METERON is a suite of experiments initiated by ESA and its partners. Its main goal is to explore different space telerobotics concepts, using a variety of communication links with different latencies and bandwidth specifications. 2)

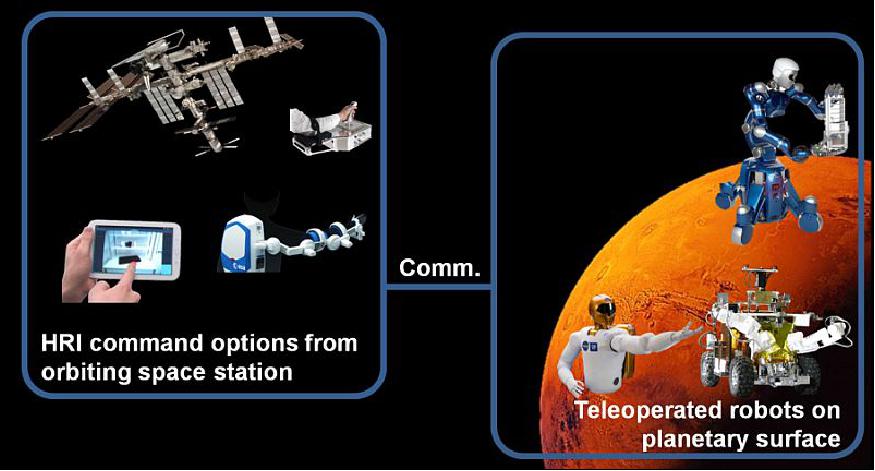

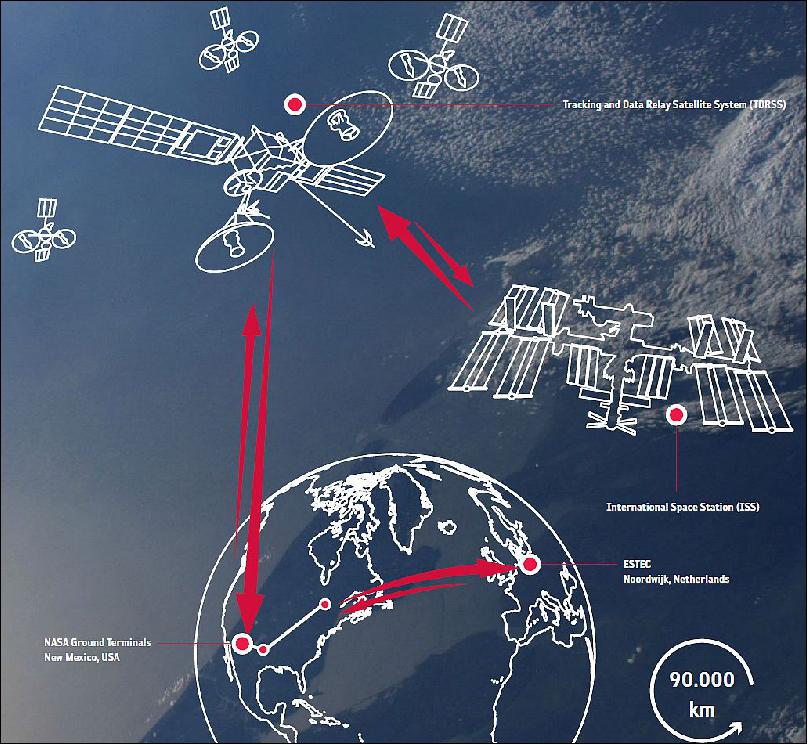

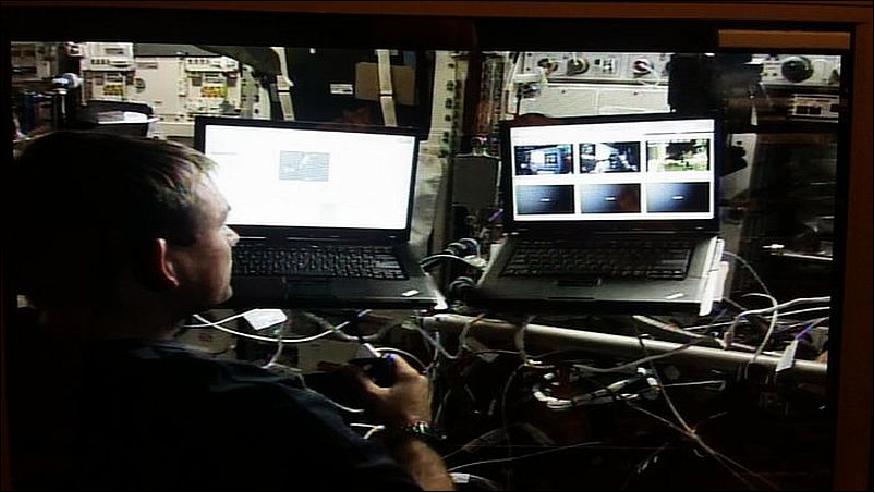

Teleoperating a robot for extraterrestrial exploration allows the operator to remain at a safe distance from dangerous environments that humans cannot reach. For the METERON experiments, rovers and service robots will be deployed on Earth in different simulated space and planetary scenarios to perform a catalog of tasks. The target robot will be commanded from the International Space Station (ISS) through different HRI (Human Robot Interface) options, ranging from laptop and tablet PCs to force-reflection joysticks and an exoskeleton. Figure 1 gives a concept overview of the METERON experiment suite.

Two Forms of Telerobotic Commands will be Studied in the METERON Experiment Suite

1) Full haptic feedback telepresence, which requires low latency communication links coupled with haptic capable robots and HRI.

2) Supervised autonomy, which uses high-level abstract commands to communicate complex tasks to the robot. This style of telerobotics depends on the robot's local intelligence to carry out decision making and processing on site. Supervised autonomy can significantly reduce the workload and improve the task success rate of the human-robot team, and it tolerates much higher communication latencies, coping with multi-second delays.

Meteron supports the implementation of a Space Internet, examines the benefits of controlling surface robots in real time from an orbiting spacecraft, and investigates how best to explore a planet through a partnership between humans and robots. This is important for planning future human exploration missions, to Mars for example, and feeds into Earth-based technologies such as medicine or the handling of radioactive material.

Controlling Robots Across Oceans and Space – no Magic Required

• October 2, 2019: This Autumn is seeing a number of experiments controlling robots from afar, with ESA astronaut Luca Parmitano directing a robot in The Netherlands and engineers in Germany controlling a rover in Canada. 3)

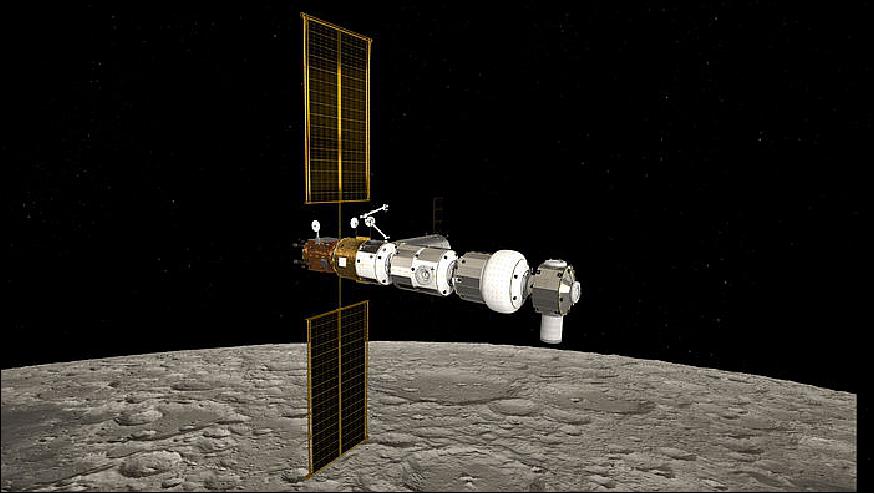

Imagine looking down at the Moon from the Gateway as you prepare to land near a lunar base to run experiments, but you know the base needs maintenance work on the life-support system that will take days. It would be better to maintain the base from orbit so the astronauts can get straight to work once on the Moon.

Human-robotic partnerships are at the heart of ESA’s exploration strategy, which includes preparing for scenarios like this by sending robotic scouts to the Moon and planets, hand-in-hand with astronauts controlling them from orbit.

The Meteron project was formed to develop the technology and know-how needed to operate rovers in these harsh conditions. It covers all aspects of operations, from communications and the user interface to surface operations and even connecting the robots to the astronauts by sense of touch.

Haptics Laboratory

The objective of the Haptics Laboratory is to study the task capability of a dexterous humanoid robot on a planetary surface, commanded from a space station (e.g. the ISS) in orbit using only a single tablet PC. A Dell Latitude 10 tablet PC running Windows 8 was upmassed to the ISS on ESA’s ATV-5 in July 2014 for the METERON Haptics-1 experiment. New GUI (Graphical User Interface) software will be uploaded to this tablet PC for Supvis-Justin (Ref. 2).

The intended robotic tasks include survey, navigation, inspection and maintenance. These tasks will be performed on a solar farm located on a simulated extraterrestrial planetary surface. With a communication link between the ISS and Earth that has delays of up to 10 seconds, real-time telepresence is not possible. Instead, in Supvis-Justin, abstract task-level commands are processed and executed locally by the robot. The robot works together with the tablet PC operator on the ISS as a co-worker, rather than as a remote electromechanical extension of the astronaut. Furthermore, previous studies have shown that delegating to the robot through task-space commands significantly reduces the operator's workload.
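A minimal Python sketch can make this contrast concrete. It assumes a hypothetical task library on the robot that expands one task-level command into several primitive actions locally, and a deliberately simplified cost model in which every command sent from orbit costs one round trip over the link. The task names echo the survey, inspection and maintenance tasks above; the primitive lists and function names are illustrative inventions, not the Supvis-Justin software.

```python
"""Hypothetical sketch: task-level commanding vs. step-by-step commanding over a delayed link."""

# Hypothetical task library held on the robot: each task-level command
# expands locally into a sequence of primitive actions.
TASK_LIBRARY: dict[str, list[str]] = {
    "survey": ["navigate_to", "pan_camera", "capture_image"],
    "inspect": ["navigate_to", "point_camera", "capture_image", "report"],
    "maintain": ["navigate_to", "grasp", "actuate", "release", "report"],
}


def expand(task: str, target: str) -> list[str]:
    """Expand a task-level command into primitives on board the robot."""
    if task not in TASK_LIBRARY:
        raise KeyError(f"unknown task: {task}")
    return [f"{step}({target})" for step in TASK_LIBRARY[task]]


def link_wait_time(num_primitives: int, round_trip_delay_s: float, supervised: bool) -> float:
    """Simplified cost model: each command sent from orbit costs one round trip.

    Supervised autonomy sends a single task-level command; step-by-step
    commanding sends (and waits on) one command per primitive.
    """
    commands_sent = 1 if supervised else num_primitives
    return commands_sent * round_trip_delay_s


if __name__ == "__main__":
    plan = expand("maintain", "solar_panel_3")
    print(plan)
    print(link_wait_time(len(plan), 10.0, supervised=True))
    print(link_wait_time(len(plan), 10.0, supervised=False))
```

Taking a 10 s round trip as an example, the operator waits once per task instead of once per primitive; the real benefit also depends on how much the robot can decide on site.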

Haptics-1 Experiment

Haptics-1, part of METERON, is the first of a series of in-flight experiments to demonstrate advanced teleoperation technologies and real-time force-feedback telecontrol of robots on Earth from space.

Launch

On July 29, 2014, a Haptics device was flown to the ISS in a pouch, as a small payload on ESA's ATV-5 (Automated Transfer Vehicle), launched on an Ariane 5 ES from Europe's Spaceport in Kourou; ATV-5 delivered 6.6 tons of supplies. 4)

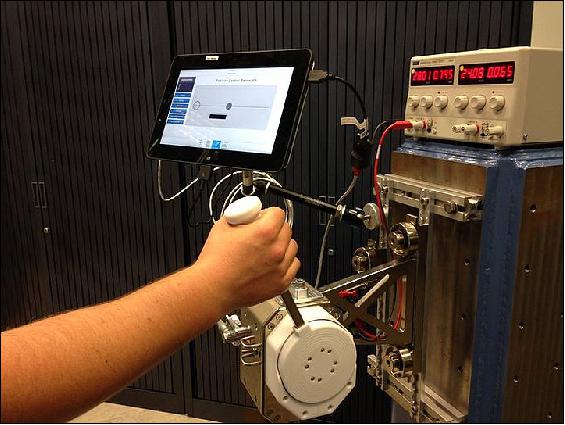

Haptics-1 is a single-degree-of-freedom force-reflective joystick (the 1-DOF Setup). The high-performance joystick uses active joint impedance control, enabled by a custom torque sensor at the joint output, and is driven by a highly power-dense RoboDrive ILM brushless motor.

The joystick runs internally at a 2 kHz sample rate in hard real time and can communicate all internally acquired data (handle position, velocity, torque, current, etc.) to external systems at that rate. Internally, the joystick uses an Intel Atom Z530 (1.6 GHz) embedded computer that communicates with the joint motor controller via a real-time EtherCAT bus. The joystick is fully self-powered and only needs a 28 V input from the ISS and a mechanical interface on the station's seat tracks.

The handle bar can present any position or torque to the user without ripple. The joystick can therefore easily render torque, stiffness and damping, and any combination of control modes can be used at any instant for the various experiments. The pouch also houses a flight-qualified tablet computer (Dell Latitude 10) that runs the Haptics-1 GUI (Graphical User Interface). The GUI is the sole entry point for the crew to load and execute experiments with the joystick.
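The text does not give the joystick's control law. As a hedged illustration only, the Python sketch below uses the textbook spring-damper impedance law, tau = tau_bias - k*(q - q0) - b*dq, evaluated once per 0.5 ms cycle to match the stated 2 kHz rate. Setting k and b to zero gives pure torque rendering, and any mix of the three terms can be chosen per cycle, mirroring the combinable control modes described above. The class name, the parameter values and the rigid-handle simulation are assumptions; the real device additionally closes a torque loop around its joint torque sensor, which the sketch omits.

```python
"""Hypothetical 1-DOF impedance rendering loop at 2 kHz (textbook spring-damper law)."""
from dataclasses import dataclass

SAMPLE_RATE_HZ = 2000          # loop rate stated for the Haptics-1 joystick
DT = 1.0 / SAMPLE_RATE_HZ      # 0.5 ms per control cycle


@dataclass
class ImpedanceCommand:
    """Virtual environment rendered at the handle; all values are illustrative."""
    stiffness: float = 0.0     # N*m/rad
    damping: float = 0.0       # N*m*s/rad
    rest_angle: float = 0.0    # rad
    bias_torque: float = 0.0   # N*m, used for pure torque rendering

    def torque(self, angle: float, velocity: float) -> float:
        """Desired handle torque: tau = tau_bias - k*(q - q0) - b*dq."""
        return (self.bias_torque
                - self.stiffness * (angle - self.rest_angle)
                - self.damping * velocity)


def simulate(cmd: ImpedanceCommand, angle0: float, inertia: float, duration_s: float) -> float:
    """Release the handle at angle0 and integrate a rigid handle (no hand attached).

    Uses semi-implicit Euler at the 2 kHz loop rate; returns the final angle in rad.
    """
    angle, velocity = angle0, 0.0
    for _ in range(round(duration_s / DT)):
        accel = cmd.torque(angle, velocity) / inertia
        velocity += accel * DT
        angle += velocity * DT
    return angle


if __name__ == "__main__":
    spring = ImpedanceCommand(stiffness=2.0, damping=0.05)
    print(spring.torque(0.1, 0.0))  # restoring torque pulls the handle back toward 0
    print(simulate(spring, 0.2, inertia=0.01, duration_s=1.0))
```

Releasing the handle from 0.2 rad with these illustrative values produces a damped oscillation that settles toward the rest angle within about a second.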

In the first flight experiments of Haptics-1, an extensive set of physiological data is collected to help determine requirements for the design of future haptic devices for use in space. Up to now, such data has not been available! We suspect that the perception thresholds will change in microgravity. We are also testing which way the joystick is better used: wall-mounted (as shown in Figure 3) or body-mounted, with the joystick strapped directly onto the crew member's chest to provide body-grounded force feedback.

In seven distinct science protocols, the project will measure crew human-factors data after extended exposure to microgravity. The measurements include, for instance, the bandwidth of human motion in position and force control tasks, the minimum stimulus thresholds for sensing torque and stiffness, and the mass and stiffness of the upper extremity, along with other measures that will clearly define how humans interact with force-feedback systems when exposed to microgravity. This data will help the project design the ISS payload exoskeleton, which will hopefully fly by mid-2016.

Haptics-1 is a preparation for Haptics-2, the next experiment, on which the project has already started working. In 2015, Haptics-2 will use the 1-DOF Setup in space to teleoperate a 1-DOF Setup on Earth. The project plans to use a low-latency direct S-band link between space and ground, marking the first real teleoperation experiment ever conducted from space! The first haptic interaction in space will already take place this year, under Haptics-1, in October 2014 with ESA astronaut Alexander Gerst. Alexander will be the first to install the Haptics-1 equipment on the ISS and perform a full set of the seven science protocols.

The Haptics-1 experiment (Figure 4) comes down to a deceptively simple-looking lever that can be moved freely to play simple Pong-style computer games. Behind the scenes, a complex suite of servomotors can withstand any force an astronaut operator might unleash on it, while also generating forces that the astronaut will feel in turn, just like a standard video gaming joystick as a player encounters an in-game obstacle. 5)

Haptics-2 Experiment

The Haptics devices, on ISS and in the Telerobotics Lab of ESA, are identical twins.

In the Haptics-2 experiment, the project is testing a series of protocol combinations with technology beyond the state of the art. On June 3, 2015, the project will carry out a first comparison and a first check of force-feedback control over a system that spans Earth and space!

Two routes are available to send signals back and forth between the ISS and the test setup at the ESA Telerobotics and Haptics Laboratory. The first route is NASA's Ku-Forward (Ku-Fwd) system, which relays data via the geosynchronous TDRS satellite constellation.

The second is a low-latency direct S-band link provided by our Russian partners through S-band hardware installed on the Russian module of the ISS. The signal is then received by a ground station of DLR (German Aerospace Center) in Germany. While this link is direct and has little transmission delay, its bandwidth is very limited. The first link, by contrast, offers large bandwidth but much longer signal travel times.

First, the project will test the more challenging link, the new NASA Ku-Forward system. It should transmit signals between the sites in less than 0.8 seconds. However, real-time force-feedback control over that much delay is very hard to do! One question that the 3 June test addresses is therefore exactly how much delay exists between the sites over this link.
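One common way to answer that question, sketched here in Python under stated assumptions, is an echo test: the ground sends timestamped packets, the remote side returns them, and the sender compares send and receive times on its own clock. The function name, the dictionary keys and the sample timestamps are hypothetical; the timestamps are illustrative and are not METERON measurements.

```python
"""Hypothetical echo-test analysis: round-trip delay and jitter of a link."""
from statistics import mean, pstdev


def characterize_link(send_times: list[float], echo_times: list[float]) -> dict[str, float]:
    """Summarize round-trip times (s) from packets echoed back to the sender.

    Both timestamps come from the sender's own clock, so no space/ground
    clock synchronization is needed for the round-trip figures.
    """
    if len(send_times) != len(echo_times) or not send_times:
        raise ValueError("need matching, non-empty timestamp lists")
    rtts = [rx - tx for tx, rx in zip(send_times, echo_times)]
    if any(rtt < 0 for rtt in rtts):
        raise ValueError("echo received before it was sent")
    return {
        "mean_rtt": mean(rtts),
        "min_rtt": min(rtts),
        "max_rtt": max(rtts),
        "jitter_std": pstdev(rtts),       # spread of the round-trip time
        "one_way_est": mean(rtts) / 2.0,  # only valid if both directions are symmetric
    }


if __name__ == "__main__":
    # Illustrative timestamps only, not measured METERON data.
    sent = [0.0, 1.0, 2.0, 3.0]
    echoed = [0.70, 1.75, 2.72, 3.77]
    print(characterize_link(sent, echoed))
```

Because both timestamps come from one clock, round-trip figures need no space-to-ground synchronization. Halving the round trip to get a one-way delay is only valid for a symmetric path; measuring each direction separately requires synchronized clocks, which is the purpose of the GPS and NTP time analysis in the August 14, 2015 status entry.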

After a full characterization of the link, the project will do the more fun stuff: a handshake from space, control of a video camera between the two Haptics locations so that Terry Virts can act as an 'avatar' between us, and finally (hopefully, if time allows) a remote stiffness discrimination test. In this test, Terry will probe objects of varying stiffness remotely from space and rate their relative stiffness. This way, we can find out whether the teleoperation system itself degrades his ability to sense differences. Hopefully not! He should be able to sense stiffness differences of at least 25% without a doubt!

Haptics Mission Status

• March 15, 2016: ESA’s Meteron project is looking at ways not only to reduce the time delay when commanding robots from space, but also to make the command path robust against signal interruptions that may occur over long distances with multiple relay stations. Tim Peake: “On March 17 and April 25, 2016, I will be part of an experiment to drive two different rovers from Europe’s Columbus laboratory. At first glance it might seem easy to operate a rover from space – the hard part would be getting astronauts and the rovers to their destination – but the communications network and robot interface need to be built from scratch and tested to work in microgravity. On March 17, I will be driving Eurobot at ESTEC in The Netherlands in a two-hour experiment. The Space Station flies at around 28 800 km/h and completes a full orbit of Earth every 90 minutes, so throughout the session my commands could be beamed directly to the car-sized rover while at other times the Space Station will be on the other side of the planet – so commands need to be relayed through satellites and multiple control centers. To keep everything going smoothly, engineers at ESTEC created a new type of ‘space-internet’ that adapts to the changing connections and network speed. In the experiment, Eurobot will be near a mock-up lunar base that has a problem with its solar array. It is up to me to locate, grab and unjam the solar panels.” 6)

• On August 14, 2015, the Haptics-2 project acquired good science results for the second time. Very close interaction with the crew (JAXA astronaut Kimiya Yui) made the science protocol execution very efficient; the session collected a full set of 9 science runs and performed a detailed Ku-Forward link characterization. 7)

Here is a brief wrap-up of what was actually completed today:

- One full run of Protocol 1b (and one partial run), checking out the Ku-Forward communication link on all ports and quantifying their delay and jitter. Three channels are used: telepresence, housekeeping data and video.

- Seven science data acquisitions with different controller settings to quantify the telepresence control, performed as teleoperated stiffness discrimination tasks by Kimiya. These runs completed a full conduct of Haptics-2 Protocol 2b. Because of Ku-Forward link connectivity mishaps during the first three runs, however, we may not be able to use those three measurements.

- A timing analysis and time synchronization between the space and ground NTP servers. On-board GPS time was acquired and synchronized with ground GPS time (with UTC leap seconds) for a detailed characterization of the on-ground and on-board NTP servers. This will be important for Interact, in order to synchronize all telemetry (space and ground) to the same time base.

- A first Space-to-Ground crew guided procedure while performing a telepresence control experiment.

- A pre-verification of our four-channel bilateral control architecture that is robust to 850 milliseconds of round-trip time delay. 8)

- A verification of the model-mediated bilateral control architecture that is robust to any time-delay. The data post-processing will reveal whether the operator in space was able to identify the right order of stiffness of objects presented to him in random order.

• On June 3, 2015, the Telerobotics Lab performed the first-ever demonstration of space-to-ground remote control with live video and force feedback when NASA astronaut Terry Virts orbiting Earth on the ISS (International Space Station) shook hands with ESA telerobotics specialist André Schiele in the Netherlands. 9) 10)

- Terry was testing a joystick that allows astronauts in space to ‘feel’ objects from hundreds of kilometers away. The joystick is a twin of the one on Earth and moving either makes its copy move in the same way.

- The joystick provides feedback so both users can feel the force of the other pushing or pulling. Earlier this year, NASA astronaut Butch Wilmore was the first to test the joystick in space but without a connection.

- This historic first ‘handshake’ from space is part of the Lab’s Haptics-2 experiment, testing systems to transmit the human sense of touch to (and from) space for advanced robot control.

Legend to Figure 5: First the ISS joystick was moved, then the slaved joystick on the ground. Then Andre shook the ground joystick in turn, felt by Terry in orbit. This first 'handshake with space' took place as part of the Lab's Haptics-2 experiment, harnessing advanced telerobotics technology for the control of space robot systems. ESA's Telerobotics and Haptics Laboratory is based at ESA/ESTEC in Noordwijk, the Netherlands.

The Haptics-2 experiment on June 3, 2015 verified the communications network, the control technology and the software behind the connection. Each signal from Terry to André had to travel from the International Space Station to another satellite (TDRS) some 36 000 km above Earth, through Houston mission control in the USA and across the Atlantic Ocean to ESA/ESTEC in the Netherlands, taking up to 0.8 seconds in total both ways.

As the Space Station travels at 28 800 km/h, the time for each signal to reach its destination changes continuously, but the system automatically adjusts to varying time delays. In addition to the joystick, Terry had an extra screen with realtime video from the ground and augmented reality added an arrow to indicate the direction and amount of force.

Legend to Figure 6: In the first-ever demonstration of space-to-ground remote control with live video and force feedback, NASA astronaut Terry Virts orbiting Earth on the International Space Station shook hands with ESA telerobotics specialist André Schiele in the Netherlands. - Terry was testing a joystick that allows astronauts in space to ‘feel’ objects from hundreds of kilometers away. The joystick is a twin of the one on Earth and moving either makes its copy move in the same way.

• January 8, 2015: NASA astronaut Butch Wilmore completed the first full Haptics-1 data collection onboard the ISS. All seven Haptics-1 protocols have now been recorded in both wall-mount and body-mount sessions! Exciting data mining and analysis lie ahead for the Telerobotics & Haptics Lab in the coming days and weeks. They will reveal in great detail what performance human crews can achieve with haptic force-feedback devices in space and what differences exist between performance in space and on the ground. 12)

- In the wall-mount configuration, the joystick is fixed to the ISS racks, and the astronaut stabilizes himself with his other hand on the handrails and with his feet.

- In the vest-mount configuration, the joystick is fixed to the crew member's own chest. The team believes that this force closure on the human body might improve the crew member's hand-eye coordination during the tests.

• January 5, 2015, Haptics-1 experiment: In a milestone for space robotics, the International Space Station has hosted the first full run of ESA’s experiment with a force-reflecting joystick. NASA crew member Barry Wilmore operated the force-feedback joystick to gather information on physiological factors such as sensitivity of feeling and perception limits. He finished a first run on New Year’s Eve (Dec. 31, 2014). 13)

- Operating on a "force feedback" mechanism, the Telerobotics and Haptics Lab's Haptics-1 hardware is essentially a force-reflecting joystick, similar in function to a video gaming joystick. "Force feedback" refers to the sensation you feel in your fingertips and hands; it helps humans carry out tasks such as typing or tying shoelaces without looking down.

- The deceptively simple-looking lever is connected to a servomotor that can withstand any force an astronaut operator might unleash on it, while generating forces that the astronaut will feel in turn – just like a standard video gaming joystick as a player encounters an in-game obstacle. The joystick measures such forces at a very high resolution.

- Harnessing that sensation for robotics would extend the human sense of touch to space or other remote areas, making robotic control much more natural and easy. Ultimately, robots could work thousands or tens of thousands of kilometers away, yet perform tasks of equal complexity to those a human operator could manage with objects immediately at hand.

- To stop the weightless users being pushed around by the force, the ‘Haptics-1’ experiment can be mounted either to a body harness or be fixed to the Station wall.
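The status entries above mention a model-mediated bilateral control architecture that is robust to any time delay, but they do not describe its internals. The following Python sketch shows the general idea under simplifying assumptions (one degree of freedom, a purely elastic object, hypothetical function and class names): the ground side fits a stiffness to its measured force and penetration samples, only that model crosses the link, and the operator side renders forces from the local model. Delay then makes the model stale but never enters the haptic loop itself. It is a generic illustration, not ESA's implementation.

```python
"""Hypothetical sketch of model-mediated teleoperation for a 1-DOF stiffness probe."""
from dataclasses import dataclass


def estimate_stiffness(penetrations: list[float], forces: list[float]) -> float:
    """Ground side: least-squares fit of f = k * x through the origin."""
    if len(penetrations) != len(forces):
        raise ValueError("mismatched sample lists")
    sxx = sum(x * x for x in penetrations)
    if sxx == 0.0:
        raise ValueError("no penetration data to fit")
    return sum(x * f for x, f in zip(penetrations, forces)) / sxx


@dataclass
class LocalEnvironmentModel:
    """Operator side: renders a local copy of the remote environment.

    The haptic loop only reads this model, so link delay makes the model
    stale but does not enter the force-feedback loop itself.
    """
    contact_position: float = 0.0  # m, where the remote object surface was detected
    stiffness: float = 0.0         # N/m, latest estimate received from ground

    def update(self, contact_position: float, stiffness: float) -> None:
        """Apply a (delayed) model update received over the link."""
        self.contact_position = contact_position
        self.stiffness = stiffness

    def feedback_force(self, position: float) -> float:
        """Force rendered to the operator: a spring that pushes back on penetration."""
        penetration = position - self.contact_position
        return -self.stiffness * penetration if penetration > 0.0 else 0.0


if __name__ == "__main__":
    # Illustrative ground samples: force grows linearly with penetration.
    k_hat = estimate_stiffness([0.001, 0.002, 0.003], [0.5, 1.0, 1.5])
    model = LocalEnvironmentModel()
    model.update(contact_position=0.010, stiffness=k_hat)
    print(k_hat)                        # fitted stiffness, N/m
    print(model.feedback_force(0.012))  # negative: pushes the operator back
    print(model.feedback_force(0.005))  # free space, no force
```

In a stiffness discrimination task like the one performed from the ISS, each probed object would produce a different fitted stiffness, so the operator feels stiffer objects as steeper springs even though the estimate arrives late.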

Interact Space Experiment

Background

In early September of 2015, Danish astronaut Andreas Mogensen will perform a groundbreaking space experiment called Interact, developed by ESA in close collaboration with the TU Delft Robotics Institute. During the 2015 ESA Short Duration Mission, Mogensen will take control of the Interact Centaur rover on Earth from the International Space Station in real-time with force-feedback. 14) 15)

The Mission

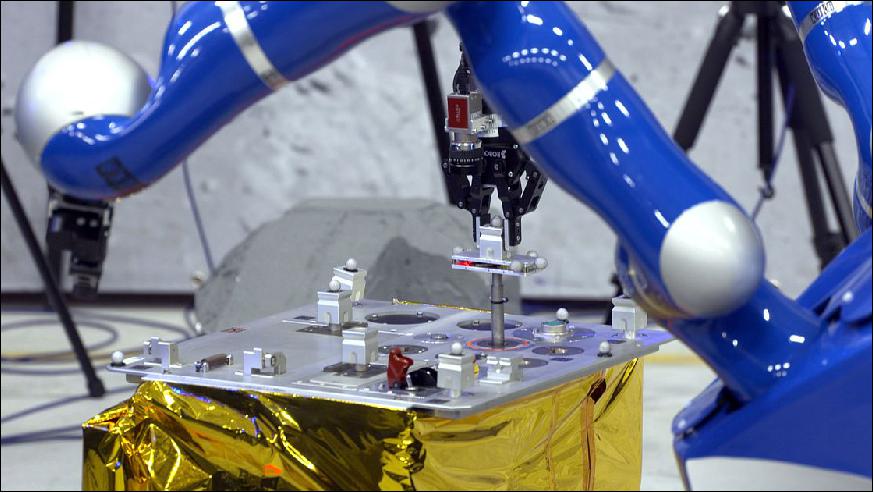

The Interact experiment, conceived and implemented by the ESA Telerobotics & Haptics Laboratory, will be the first demonstration of teleoperation of a rover from space to ground in which the operator receives force feedback during part of the experiment. The astronaut's task is to maneuver the rover, located at ESA’s ESTEC technical center in Noordwijk, through a special obstacle course, to locate a mechanical task board and to perform a mechanical assembly task. Once the task board is located and approached, the astronaut will use a specially designed haptic joystick in space to take control of one of the Centaur’s robotic arms on Earth. With the arm he will execute a “peg-in-hole” assembly task to demonstrate connector mating through teleoperation with tight mechanical tolerances of far below one millimeter. The haptic feedback allows the astronaut to actually feel whether the connector is correctly inserted and, if necessary, to fine-tune the insertion angle and alignment. The complete operation is performed from on board the International Space Station, at approximately 400 km altitude, using a data connection via a geosynchronous satellite constellation at 36,000 km altitude.

The communication between the haptic joystick and the ground system is bi-directional, and the two systems are essentially coupled. Such a bilateral system is particularly sensitive to time delay, which can cause instability. The satellite connection, called the Tracking and Data Relay Satellite System (TDRSS), results in communication time delays as large as 0.8 seconds, which makes this experiment especially challenging. ESA copes with these challenges through specialized control algorithms developed at ESA’s Telerobotics Laboratory, through augmented graphical user interfaces with predictive displays, and with ‘force sensitive’ robotic control algorithms on the ground. These ESA technologies allow the operator to work in real time from space on a planetary surface. It is as if the astronaut could extend his arm from space to ground.

With Interact, ESA aims to present and validate a future where humans and robots explore space together. Robots will provide their operators with much wider sensory feedback over much greater distances than terrestrial robots can today. Not only in space, but also on Earth, remote-controlled robotics will prove highly enabling in dangerous and inaccessible environments. They can be used in arctic conditions, in the deep sea or for robust intervention in nuclear disaster sites.

We can expect that future human exploration missions to the Moon and Mars will benefit from such advanced human-robotic operations. ESA's research in telerobotic technologies and advanced crew operations from orbit will play a key role in these coming adventures. The ESA Telerobotics and Haptics Laboratory, along with ESA's Technical and Space Exploration Directorate, is dedicated to taking the next big steps in human-robot collaboration in space.

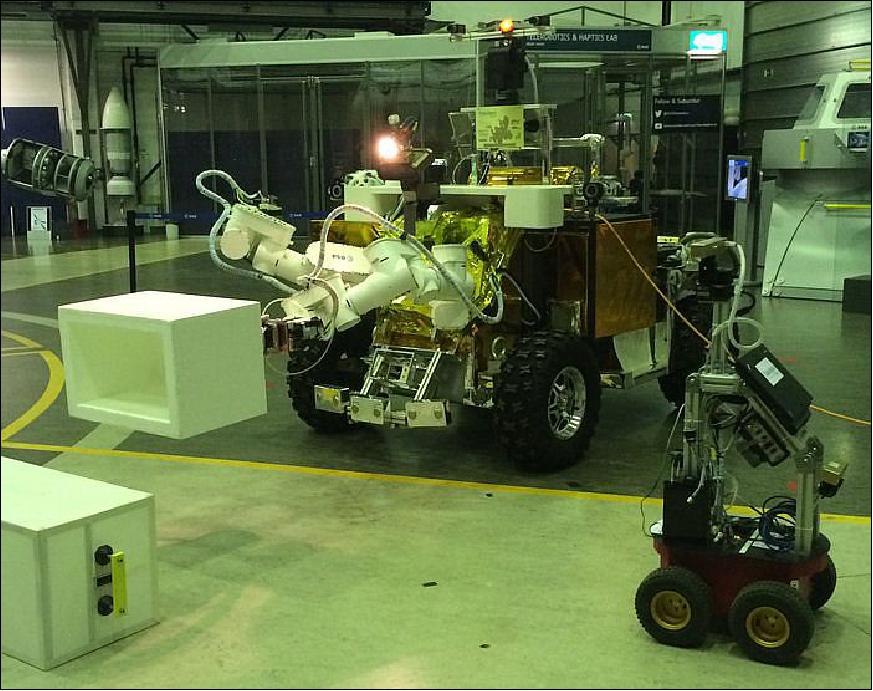

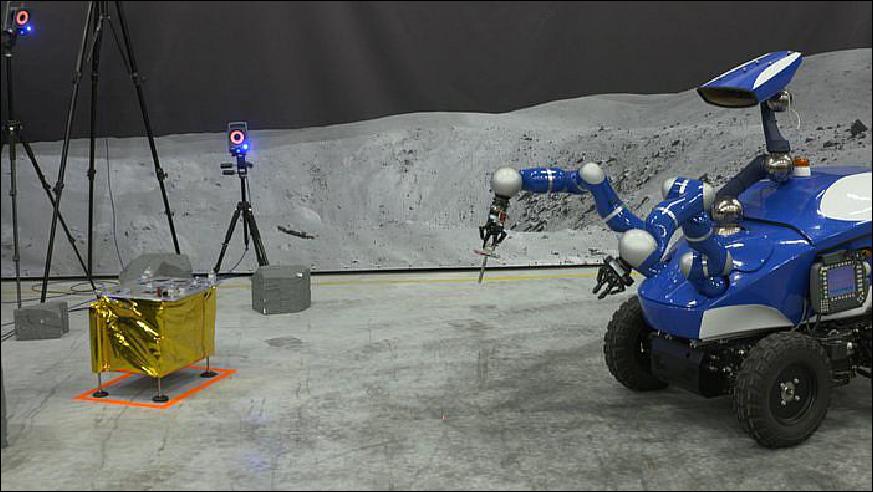

Interact Centaur

The mobile robotic platform, called the Interact Centaur, was specifically designed to maneuver through rough terrain at high speeds and to have the dexterity to perform very delicate and precise manipulation tasks through remote control. The Interact Centaur rover was designed and built by the Lab team in collaboration with graduate students from Delft University of Technology, the Netherlands. The custom vehicle design was brought from concept to reality in a little over a year.

COMPUTING

The robot makes use of seven high performance computers running software that has been programmed in a highly modular, model-based and distributed way.

ROBOTIC ARMS

Two KUKA (KUKA Laboratories GmbH, Augsburg, Germany) lightweight robotic arms on the front of the rover allow the operator to perform very precise manipulation tasks. The arms can be ‘soft controlled’ to safely interact with humans or delicate structures, and can be programmed to be compliant (like a spring and/or damper) when they hit an object. The arms are equipped with highly ‘force sensitive’ sensors and can flex and adapt much like human arms during remote control. This allows the arms to be tightly coupled to a distant operator by means of haptic (i.e. force-transmitting) interfaces. Their operation during the Interact experiment is very intuitive, allowing delicate and dexterous remote operations across very long distances, with fine force feedback to the operator despite the communication time delay.

ROVER MOBILE PLATFORM

The drivetrain and wheels for the Interact Centaur are a customized version of the remote controlled platform manufactured by AMBOT. This battery-powered, four-wheel-drive, four-wheel steering platform is weatherproof and gives the rover over 8 hours of run-time in challenging terrains.

ROBOTIC PAN-AND-TILT NECK AND HEAD

A robotic neck with 6 degrees of freedom gives the cameras in the rover’s head an enormous field of view, useful for driving and for close visual inspection tasks.

REAL-TIME CAMERAS

The rover has 4 dedicated real-time streaming cameras that the astronaut can use during the mission: a pan-tilt camera in the head that gives a general contextual overview of the situation while driving and exploring the environment; a tool camera mounted on the right robotic arm for vision during precise tool manipulation; and two hazard cameras (front and back) to view the nearby area otherwise occluded by the chassis while driving.

EXTERIOR DESIGN

A custom-made exterior protects all delicate mechatronic and computing hardware from dust and ensures a good thermal design.

AUGMENTED REALITY

To provide extra support to the astronaut while driving the rover, an augmented reality (AR) overlay was developed. This allows for virtual markers such as predicted position markers to be displayed on top of the camera feed.
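The text does not say how the predicted position markers are computed. A simple possibility, sketched here in Python, is dead reckoning: start from the last pose received in telemetry and integrate a kinematic unicycle model over a horizon equal to the round-trip delay, because telemetry is already one one-way delay old when it arrives and a new command needs another one-way delay to reach the rover. The unicycle model, the constant-command assumption, the 0.8 s horizon (the TDRSS figure quoted above) and all names are assumptions.

```python
"""Hypothetical predictive-display helper: where will the rover be after the link delay?"""
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float        # m
    y: float        # m
    heading: float  # rad, 0 = along +x


def predict_pose(pose: Pose, speed: float, yaw_rate: float,
                 horizon_s: float, dt: float = 0.01) -> Pose:
    """Dead-reckon a kinematic unicycle model forward by horizon_s seconds.

    Assumes the commanded speed (m/s) and yaw rate (rad/s) stay constant
    over the horizon; a real rover model would add slip and terrain effects.
    """
    steps = max(1, round(horizon_s / dt))
    h = horizon_s / steps
    x, y, th = pose.x, pose.y, pose.heading
    for _ in range(steps):
        x += speed * math.cos(th) * h
        y += speed * math.sin(th) * h
        th += yaw_rate * h
    return Pose(x, y, th)


if __name__ == "__main__":
    last_telemetry = Pose(0.0, 0.0, 0.0)
    # Horizon = assumed round-trip delay of 0.8 s.
    marker = predict_pose(last_telemetry, speed=0.5, yaw_rate=0.0, horizon_s=0.8)
    print(marker)
```

A marker drawn at the predicted pose lets the driver steer against where the rover will be, rather than where the delayed video shows it.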

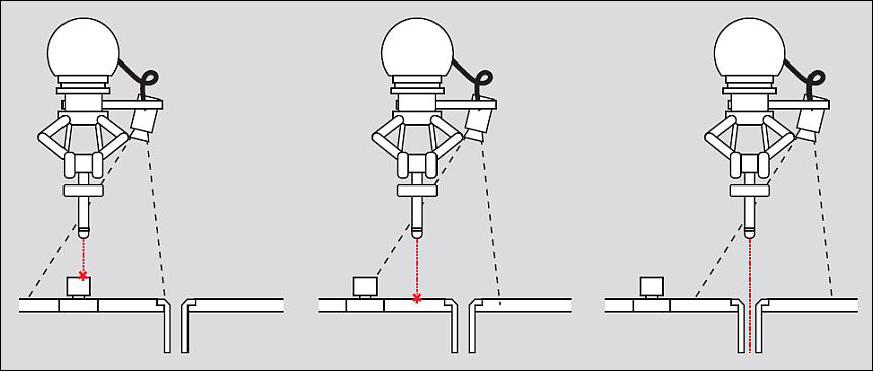

LASER GUIDANCE

To visually support the astronaut during the mechanical alignment in the peg-in-hole assembly task, a laser has been embedded in the tool. When the tool hovers over the hole, the laser spot becomes invisible, indicating that the connection can be attempted. The laser thus acts as a dedicated depth cue that creates an artificial impression of depth. This allows such complex 3D tasks to be executed without a dedicated stereo 3D video system, which would consume excessive data bandwidth.

Space-to-Ground Communications

As a complicating factor, the signals between the astronaut and the robot must travel via a dedicated and highly complex network of satellites in geostationary orbit. The signals will travel from the International Space Station via NASA’s TDRSS to ground facilities in the U.S. From there, they cross the Atlantic Ocean to the ESA facilities in Noordwijk, the Netherlands. Forces between the robot and its environment, as well as video and status data, travel back to the graphical user interface and the haptic joystick. In this round trip, all signals cover a distance of nearly 90,000 km. The resulting round-trip time delay approaches one second.

ESA developed a model-mediated control approach that enables force feedback between distributed systems with time delays of up to several seconds, without a noticeable loss of performance compared with directly coupled systems. Although these software and control methods enable the astronaut to perform such tasks on Earth, research suggests that humans can only handle signal transmission delays of up to about three seconds for control tasks that require hand-eye coordination. In theory, this would still allow haptic control from Earth of robotic systems as far away as the surface of the Moon.
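The Moon claim can be checked with a back-of-the-envelope light-time calculation. The Python sketch below uses the defined speed of light and an approximate mean Earth-Moon distance of 384,400 km; the three-second limit is the figure quoted in the text. It counts propagation only.

```python
"""Back-of-the-envelope light-time check for the Earth-Moon haptic-control claim."""

SPEED_OF_LIGHT_KM_S = 299_792.458   # exact, by definition of the metre
EARTH_MOON_MEAN_KM = 384_400        # approximate mean Earth-Moon distance
HAND_EYE_LIMIT_S = 3.0              # "about three seconds", from the text above


def round_trip_light_time(distance_km: float) -> float:
    """Minimum round-trip delay (s): propagation only, no relays or processing."""
    return 2.0 * distance_km / SPEED_OF_LIGHT_KM_S


if __name__ == "__main__":
    rtt = round_trip_light_time(EARTH_MOON_MEAN_KM)
    print(f"Earth-Moon round trip: {rtt:.2f} s")
    print("within hand-eye limit:", rtt < HAND_EYE_LIMIT_S)
```

The result, about 2.56 s, sits below the three-second figure, but relay, routing and processing delays come on top of this minimum, so the practical margin is smaller.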

HAPTICS-1 JOYSTICK

On board the ISS, the astronaut will re-use equipment from the previous Telerobotics & Haptics Lab experiments, Haptics-1 and Haptics-2. For these experiments, a tablet PC and a small force-reflective joystick were flown to the ISS to evaluate human haptic perception in space and to validate real-time telerobotic operations from space to ground. During Haptics-1, on 30 December 2014, haptics was first used in the microgravity environment of the ISS. During Haptics-2, on June 3, 2015 (21:00 CEST), a handshake with force feedback was performed for the first time in history between two humans, one in space and one on the ground (Figure 4).

Interact Flight Experiment

"Interact" is a new space technology demonstration experiment that will be conducted from on-board the International Space Station in 2015. In Interact, astronauts will control an advanced robotic system on Earth, for the first time, with force reflection and in real-time. Astronauts will execute a sequence of remotely operated tasks with the Interact Robot on Earth, located in a real-life outdoor environment. 16) 17)

The Interact Robot (Interact Centaur) consists of a four-wheel-drive, four-wheel-steering mobile platform, two seven-degree-of-freedom robot arms with grippers, and a camera and head system for visually perceiving the environment. On board, the crew will re-use the equipment provided by the Haptics-1 experiment, i.e. a tablet PC and a small force-reflective joystick.

For the very first time, a real-time data link will exist between a payload on the ISS and a robotic system on Earth, simulating as closely as possible remote robotic operations such as those from a Mars orbiter to the surface.

The Interact experiment is headed by ESA's Telerobotics & Haptics Laboratory and is implemented in collaboration with TU Delft Robotics Institute (Interactive Robotics Theme) as well as with the support of the Delft DREAM hall.

Status of the Interact Flight Experiment

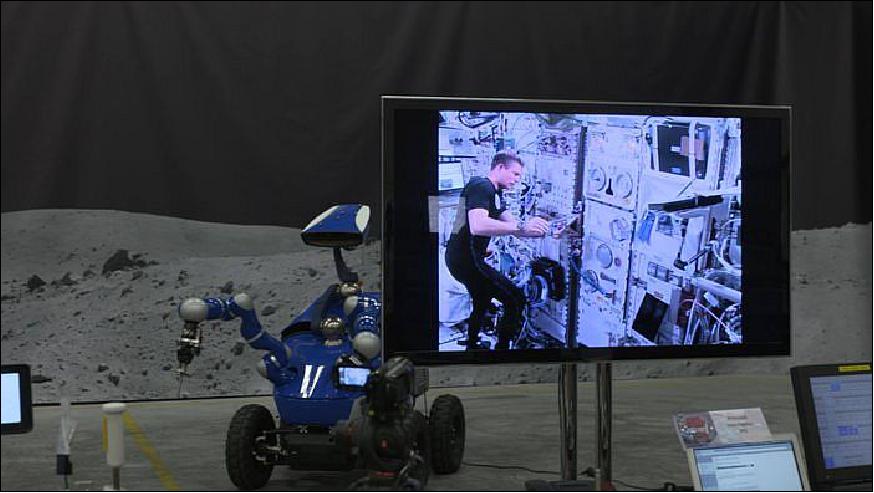

• Sept. 11, 2015: ESA astronaut Andreas Mogensen is proving to be an expert space driver after commanding two rovers from space this week. As part of ESA’s METERON project, Andreas drove a second, car-sized rover from the International Space Station to repair a mockup lunar base in the Netherlands (Figure 15). 18)

- Andreas directly controlled the Eurobot rover in a simulated troubleshooting Moon scenario. A second rover was controlled by ESA’s Columbus Control Center at DLR in Oberpfaffenhofen, Germany, allowing Andreas to focus on Eurobot and intervene if necessary.

- The new user interface for operating rovers from space ran perfectly, and the two rovers worked in harmony at close quarters without any problems. The experiment went so well that it was completed in one continuous session rather than in the three sessions planned over two days.

- “This experiment demonstrated that we have the means to operate lunar robots from a spacecraft orbiting the Moon, a topic ESA is studying at the moment,” says METERON system engineer Jessica Grenouilleau. Landing humans on a distant object is one thing, but they will also need the fuel and equipment to work and return to Earth when done. Sending robots to scout landing sites and prepare habitats for humans is more efficient and safer, especially if the robots are controlled by astronauts who can react and adapt to situations better than computer minds.

- METERON is developing the communication networks, interfaces and hardware to operate robots from a distance in space. The Space Station is being used as testbed, with astronauts controlling rovers on Earth. The demonstration showed that robots can perform valuable tasks and two can collaborate efficiently, even if they are controlled from long distances apart.

- The experiment proved the user interface works well and that ESA’s ‘space internet’ can stream five video signals to the Station – orbiting at 28 800 km/h some 400 km high – without significant delays and in good quality. If the link is lost for a moment, which often happens in space operations, the network adapts without a problem.

- The user interface was developed for ESA by a young team from Thales Alenia Space in Italy, working against tight deadlines to be ready in time for Andreas’s mission.

Legend to Figure 15: One of the tasks was to lift up insulation to inspect underneath. The METERON engineers had placed the winning drawing from a children's competition in Denmark there, so lifting the insulation revealed the winner.

Note: Andreas's stay was scheduled to last only 10 days. On the morning of 12 September 2015, at 00:51 GMT, Andreas Mogensen, Soyuz spacecraft commander Gennady Padalka and Kazakh cosmonaut Aidyn Aimbetov landed on the steppe of Kazakhstan, marking the end of their missions to the International Space Station. The trio had undocked from the orbiting complex on 11 September at 21:29 GMT in an older Soyuz spacecraft, leaving the new vessel they arrived in for the Station crew. 21)

• Sept. 8, 2015: Putting a round peg into a round hole is not hard for someone standing next to it. But yesterday (Sept. 7), ESA astronaut Andreas Mogensen did this while orbiting 400 km up aboard the ISS (International Space Station), remotely operating a rover and its robotic arm on the ground. Andreas used a force-feedback control system (Haptics-1) developed at ESA, letting him feel for himself whenever the rover’s flexible arm met resistance. 22)

- These tactile sensations were essential for the success of the experiment, which involved placing a metal peg into a round hole in a ‘task board’ that offered < 0.15 mm of clearance. The peg needed to be inserted 4 cm into the hole to make an electrical connection.

- “We are very happy with today’s results,” said André Schiele, who leads the experiment and ESA’s Telerobotics and Haptics Laboratory. Andreas managed two complete drive, approach, park and peg-in-hole insertions, demonstrating precision force-feedback from orbit for the very first time in the history of spaceflight. “He had never operated the rover before but its controls turned out to be very intuitive. Andreas took 45 minutes to reach the task board and then insert the pin on his first attempt, and less than 10 minutes on his follow-up attempt, showing a very steep learning curve.”

- The experiment took place at ESA/ESTEC in Noordwijk, the Netherlands, watched by a contingent of media as well as eager telerobotics engineers and center personnel.

- The real challenge was achieving meaningful force feedback despite the distance the signals had to travel: from the Station, hurtling around Earth at 8 km/s, up to satellites almost 36 000 km high, then down to a US ground station in New Mexico, via NASA Houston and through a transatlantic cable to ESTEC, and back. It added up to a round trip of more than 144 000 km. The inevitable two-way time delay approaches one second, but the team used software based on a dedicated control method termed ‘model-mediated control’ to help compensate for this lag, incorporating sophisticated models to prevent the operator and the arm from going out of sync.

- With time remaining, Andreas used a force-feedback joystick to differentiate between the stiffness of different springs, helping to measure the sensitivity of users up in orbit to very small degrees of feedback.

- The test was part of a suite of experiments Andreas is carrying out on his mission to the Space Station. Arriving last Friday (Sept. 4), he is due to return to Earth on Saturday (Sept. 12, 2015).
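The spring-stiffness test described above can be illustrated with a generic psychophysics sketch: render two virtual springs F = −kx and shrink their relative stiffness difference with a 1-up/2-down staircase until the user can only just tell them apart. The staircase, the deterministic simulated user and every constant (`K_REF`, `PROBE_X`, `F_MAX`, `WEBER`) are assumptions for illustration; the source does not say how ESA ran the test.

```python
from statistics import mean

K_REF = 200.0   # reference spring stiffness, N/m (hypothetical)
PROBE_X = 0.01  # displacement at which both springs are felt, m (hypothetical)
F_MAX = 5.0     # joystick force limit, N (hypothetical)
WEBER = 0.15    # simulated user's discrimination threshold (hypothetical)


def spring_force(k: float, x: float, f_max: float = F_MAX) -> float:
    """Render a virtual spring F = -k * x, clipped to the actuator's force limit."""
    return max(-f_max, min(f_max, -k * x))


def simulated_user(delta: float) -> bool:
    """Deterministic stand-in for the astronaut: correctly names the stiffer
    spring only if the relative force difference exceeds WEBER."""
    f_ref = abs(spring_force(K_REF, PROBE_X))
    f_cmp = abs(spring_force(K_REF * (1.0 + delta), PROBE_X))
    return (f_cmp - f_ref) / f_ref > WEBER


def staircase(start: float = 0.5, step: float = 0.02,
              n_reversals: int = 8, max_trials: int = 500) -> float:
    """1-up/2-down staircase on the relative stiffness difference delta.

    Steps delta down after two correct answers in a row and up after any
    wrong answer; returns the mean delta at the direction reversals."""
    delta, correct_run, last_dir = start, 0, 0
    reversals = []
    for _ in range(max_trials):
        if simulated_user(delta):
            correct_run += 1
            if correct_run < 2:
                continue          # wait for a second correct answer in a row
            new_dir = -1          # make the task harder
        else:
            new_dir = +1          # make the task easier
        correct_run = 0
        if last_dir != 0 and new_dir != last_dir:
            reversals.append(delta)
            if len(reversals) == n_reversals:
                break
        last_dir = new_dir
        delta = max(step, delta + new_dir * step)
    return mean(reversals)


if __name__ == "__main__":
    print(f"estimated threshold: {staircase():.1%} stiffness difference")
```

With this deterministic stand-in the staircase simply brackets `WEBER`; with a real, noisy observer, a 1-up/2-down rule converges on the difference that is detected about 71% of the time.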

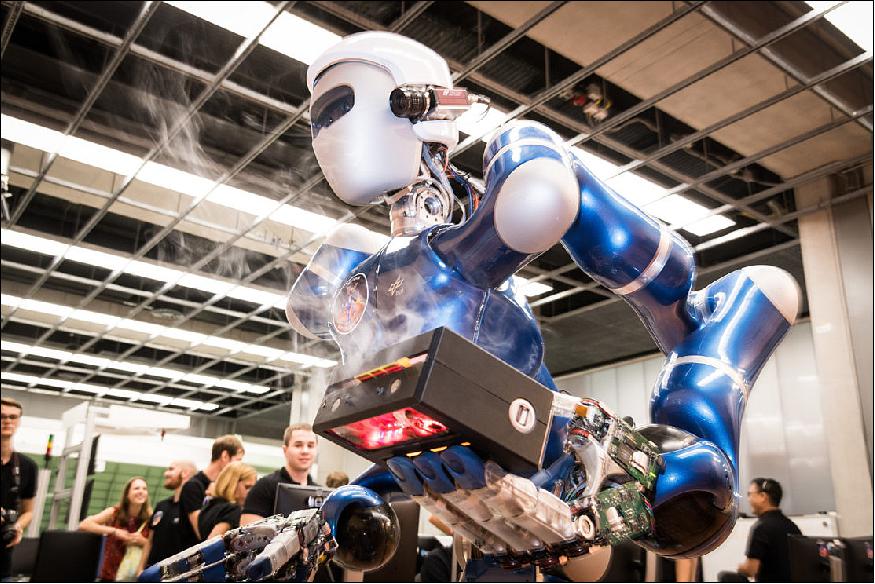

Astronauts Control the DLR Robot Justin from Space in ESA's METERON Experiments

• August 21, 2018: Rollin’ Justin, the humanoid robot developed by the German Aerospace Center (DLR), proved able to handle an unexpected situation just fine. Last week (17 August), ESA astronaut Alexander Gerst on the ISS conducted the SUPVIS-Justin experiment, part of ESA’s METERON project, which aims to demonstrate the technology needed to allow astronauts orbiting other planets to direct robots on the surface. 26)

- Using a basic tablet with a dedicated app, Alexander interacted with Justin by sending a series of commands from the International Space Station. Justin, located at the DLR site in Oberpfaffenhofen, Germany, performed maintenance and assembly tasks for two hours in his simulated Mars environment.

- The demonstration homed in on Justin’s semi-autonomous ability to assess his situation and proceed from there. Alexander in turn had to supervise Justin based on the robot’s feedback. This feature was key in the second half of the experiment, which was designed to test how responsive the telerobotic systems are in unexpected situations. Tasked with retrieving an antenna from the lander and mounting it on a special payload unit, Justin encountered smoke coming from inside the unit. Alexander reacted by asking Justin to investigate further.

- Advancements in robotics and artificial intelligence mean we can better use robots to fulfil tasks normally left to humans, especially in more dangerous situations such as inhospitable planetary surfaces. With the help of robots, humans can more safely and efficiently build habitats and scout the surface of other planets in the future.

- The experiment was first performed by ESA astronaut Paolo Nespoli during his mission in 2017, along with NASA astronauts Randy Bresnik and Jack Fischer. It quickly became a hit among the Space Station’s crew members. NASA astronaut Scott Tingle performed the second experiment last March. European researchers will work with the feedback from astronauts to improve the interface.

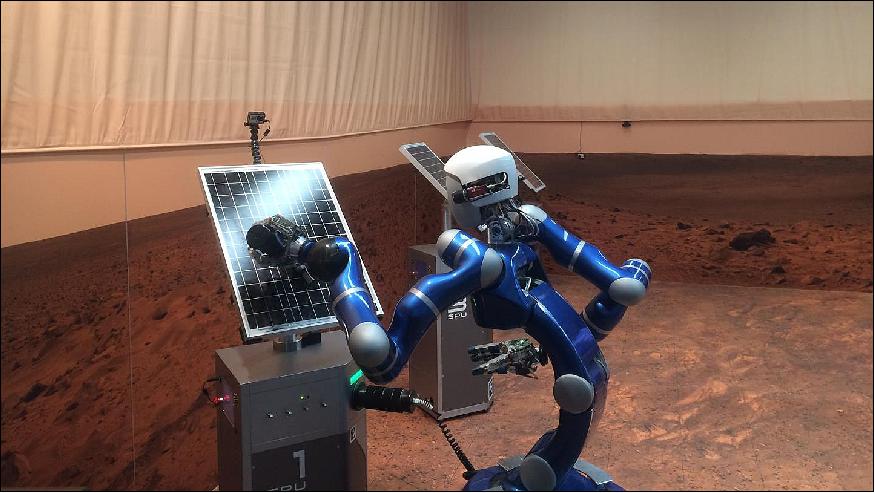

• On 2 March 2018, engineers at the DLR/IRM (Institute of Robotics and Mechatronics) in Oberpfaffenhofen set up the robot called Justin in a simulated Martian environment. Justin was given a simulated task to carry out, with as few instructions as necessary. The maintenance of solar panels was the chosen task, since they’re common on landers and rovers, and since Mars can get kind of dusty. 27)

Experiment Objectives

- Test run at the DLR site in Oberpfaffenhofen: artificial intelligence for the cooperation of robot and astronaut.

- Experiment with more challenging tasks for the human-machine team in preparation for planetary exploration missions.

- Focus: Space, Artificial Intelligence, Robotics, Human Spaceflight.

“The robot is clever, but the astronaut is always in control,” says Neal Lii, the DLR project manager. In August 2017, the experiment was successfully carried out for the first time as part of the METERON (Multi-Purpose End-to-End Robotic Operation Network) project, together with ESA (European Space Agency). In the second test run, the tasks have now become more demanding for both the robot and the astronaut.

Robot with Artificial Intelligence

For the experiment, the robot Justin was relocated to Mars as a worker – visually at least – in order to inspect and maintain solar panels as autonomously as possible – task by task – and to provide the orbiting astronaut with constant feedback for the next stages of work. "Artificial intelligence allows the robot to perform many tasks independently, making us less susceptible to communication delays that would make continuous control more difficult at such a great distance," Lii explains. "And we also reduce the workload of the astronaut, who can transfer tasks to the robot." To do this, however, astronauts and robots must cooperate seamlessly and also complement one another.

Human-Machine Team

To begin with, scientists had the human-machine team handle a few standard tasks, which had already been practised on the ground and also performed with Justin commanded from the ISS. But subsequent assignments went well beyond mechanical tasks. The solar panels were covered with dust, a problem that astronauts and robots would have to overcome on a planetary mission to Mars, for example. The panels were also not optimally oriented towards the sunlight; for solar panels supplying a Martian colony, this would soon cause the energy supply to grow weaker and weaker.

NASA astronaut Scott Tingle on board the ISS, who was viewing the working environment on the Red Planet through Justin's eyes on his tablet, quickly realized that Justin needed to clean the panels to remove the dust and also had to realign the solar components. To have Justin do this, Tingle could choose from a range of abstract commands on the tablet. “Our team closely observed how the astronaut accomplished these tasks, without being aware of these problems in advance and without any knowledge of the robot's new capabilities,” says DLR engineer Daniel Leidner. The new tasks also posed a challenge for Justin. Instead of simply reporting whether he had fulfilled a requirement or not, as in the initial test run in August 2017, this time he and his operator had to ‘estimate’ the extent to which he had cleaned the panels. The team on the ground monitored his choices.
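The move from pass/fail reports to graded estimates can be illustrated with a toy supervision loop: the operator issues an abstract command, the robot carries it out on its own and reports how complete it believes the job is, and the operator decides whether to repeat it. Everything here is hypothetical (the `TaskReport` and `ToyPanelRobot` names, the `"clean_panel"` command, the 60% of remaining dust removed per wipe, the 0.95 acceptance level); it is not DLR's SUPVIS-Justin software.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TaskReport:
    command: str
    estimated_completion: float  # robot's own estimate, 0.0 (nothing done) to 1.0 (done)


class ToyPanelRobot:
    """Hypothetical robot whose every wipe removes a fixed share of the remaining dust."""

    def __init__(self, dust: float = 1.0, removal_per_wipe: float = 0.6):
        self.dust = dust
        self.removal_per_wipe = removal_per_wipe

    def execute(self, command: str) -> TaskReport:
        if command != "clean_panel":
            raise ValueError(f"unknown command: {command}")
        self.dust *= 1.0 - self.removal_per_wipe
        # Graded report instead of pass/fail: how clean the panel now appears.
        return TaskReport(command, estimated_completion=1.0 - self.dust)


def supervise(robot: ToyPanelRobot, command: str,
              accept_at: float = 0.95, max_attempts: int = 10) -> List[float]:
    """Operator loop: repeat an abstract command until the robot's estimate
    is good enough, or give up after max_attempts."""
    history: List[float] = []
    for _ in range(max_attempts):
        report = robot.execute(command)
        history.append(report.estimated_completion)
        if report.estimated_completion >= accept_at:
            break
    return history


if __name__ == "__main__":
    print(supervise(ToyPanelRobot(), "clean_panel"))
```

With these assumed numbers the loop accepts after four wipes, at an estimated 97% clean; the decision to stop rests on the operator's threshold rather than on a binary success flag from the robot.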

In the next series of experiments in the summer of 2018, German ESA astronaut Alexander Gerst will take command of Justin aboard the ISS, and the tasks will again become slightly more complicated than before, because Justin will have to select a component on behalf of the astronaut and install it on the solar panels.

The Astronaut's Partner

"This is a significant step closer to a manned planetary mission with robotic support," says Alin Albu-Schäffer, head of DLR/IRM. In such a future, an astronaut would orbit a celestial body – from where he or she would command and control a team of robots fitted with artificial intelligence on its surface. "The astronaut would therefore not be exposed to the risk of landing, and we could use more robotic assistants to build and maintain infrastructure, for example, with limited human resources." In this scenario, the robot would no longer simply be the extended arm of the astronaut: "It would be more like a partner on the ground."

References

1) “METERON Project,” ESA, 26 April 2017, URL: https://www.esa.int/Our_Activities/Space_Engineering_Technology/Automation_and_Robotics/METERON_Project

2) Neal Y. Lii, Daniel Leidner, André Schiele, Peter Birkenkampf, Ralph Bayer, Benedikt Pleintinger, Andreas Meissner, Andreas Balzer, “Simulating an extraterrestrial environment for robotic space exploration: The METERON-Supvis-Justin telerobotic experiment and the SOLEX proving ground,” URL: http://meteron.dlr.de/wp-content/uploads/2015/05/P10_Lii.pdf

3) “Controlling robots across oceans and space – no magic required,” ESA, 2 October 2019, URL: http://www.esa.int/Our_Activities/Human_and_Robotic_Exploration/Exploration/Controlling_robots_across_oceans_and_space_no_magic_required

4) “Haptics-1 ... on it's way to ISS on ATV-5,” Telerobotics & Haptics Lab, July 29, 2014, URL: http://www.esa-telerobotics.net/news/13/67/Haptics-1-on-it-s-way-to-ISS-on-ATV5

5) “Experiment setup,” ESA, Feb. 2, 2014, URL: http://www.esa.int/spaceinimages/Images/2014/02/Experiment_setup

6) ”Space-to-ground remote,” Tim Peake's Principia Blog, March 15, 2016, URL: http://blogs.esa.int

/tim-peake/2016/03/15/space-to-ground-remote/

7) André Schiele, “Post Experiment up-date 14/08/2015, 15:30, METERON Project,” Live Blog, August 14, 2015, URL: http://esa-telerobotics.net/meteron/flight-experiments/haptics-2/live-blog-13th-august-2015

8) J. Rebelo, A. Schiele, “Time Domain Passivity Controller for 4-Channel Time-Delay Bilateral Teleoperation,” IEEE Transactions on Haptics, Volume 8, Issue 1, Oct. 16, 2014, pp. 79-89, ISSN: 1939-1412, Date of current version: 18 March 2015, DOI: 10.1109/TOH.2014.2363466

9) “Historic Handshake between Space and Earth,” ESA, June 3, 2015, URL: http://www.esa.int/Our_Activities/Human_Spaceflight/Historic_handshake_between_space_and_Earth

10) “Haptics-2 — First-time teleoperation with force-feedback from Space done on 03. June 2015,” ESA, June 3, 2015, URL: http://www.esa-telerobotics.net/gallery/Meteron-Experiments/Haptics-2/79

11) “First handshake with space,” ESA, June 3, 2015, URL: http://www.esa.int/spaceinimages/Images/2015/06/First_handshake_with_space

12) “First Haptics-1 dataset collection completed,” Telerobotics & Haptics Lab, January 8, 2015, URL: http://www.esa-telerobotics.net/news/17/67/First-Haptics-1-dataset-collection-completed

13) “Astronaut feels the force,” ESA, January 5, 2015, URL: http://www.esa.int/Our_Activities/Space_Engineering_Technology/Astronaut_feels_the_force

14) “Interact Space Experiment - Online Fact Sheet,” ESA, URL: http://esa-telerobotics.net/uploads/documents/Interact%20Brochure%20-%20Online.pdf

15) “Astronaut Andreas to try sub-millimeter precision task on Earth from Orbit,” ESA, Aug. 27, 2015, URL: http://www.esa.int/Our_Activities/Space_Engineering_Technology/Astronaut_Andreas_to_try_sub-millimetre_precision_task_on_Earth_from_orbit

16) André Schiele, “Interact Flight Experiments,” ESA, Sept. 2015, URL: http://esa-telerobotics.net/meteron/flight-experiments/interact

17) “Telerobotics head introduces Interact rover,” ESA, Sept. 1, 2015, URL: http://www.esa.int/spaceinvideos/Videos/2015/09/Telerobotics_head_introduces_Interact_rover

18) “Supervising two rovers from space,” ESA, Sept. 11, 2015, URL: http://www.esa.int/Our_Activities/Human_Spaceflight/iriss/Supervising_two_rovers_from_space

19) http://www.esa.int/spaceinimages/Images/2015/09/Eurobot_rover_with_surveyor

20) “Repairing Lunar Lander reveals a surprise,” ESA, Sept. 11, 2015, URL: http://www.esa.int/spaceinimages/Images/2015/09/Repairing_Lunar_Lander_reveals_a_surprise

21) “Andreas Mogensen lands after a busy mission on Space Station,” ESA, Sept. 12, 2015, URL: http://www.esa.int/Our_Activities/Human_Spaceflight/iriss/Andreas_Mogensen_lands_after_a_busy_mission_on_Space_Station

22) “Slam dunk for Andreas in space controlling rover on ground,” ESA, Sept. 8, 2015, URL: http://www.esa.int/Our_Activities/Space_Engineering_Technology/Slam_dunk_for_Andreas_in_space_controlling_rover_on_ground

23) “Andreas controlling rover,” ESA, Sept. 8, 2015, URL: http://www.esa.int/spaceinimages/Images/2015/09/Andreas_controlling_rover

24) “Rover approaching task board,” ESA, Sept. 8, 2015, URL: http://www.esa.int/spaceinimages/Images/2015/09/Rover_approaching_task_board

25) “Rover inserting peg,” ESA, Sept. 8, 2015, URL: http://www.esa.int/spaceinimages/Images/2015/09/Rover_inserting_peg

26) “It is lit,” ESA, Human and robotic exploration image of the week: Justin the humanoid robot during a space-to-ground experiment, 21 August 2018, URL: http://m.esa.int/spaceinimages/Images/2018/08/It_is_lit

27) “Collaboration between space and Earth - Astronaut Scott Tingle controls DLR robot Justin from space,” DLR, 2 March 2018, URL: http://www.dlr.de/dlr/en/desktopdefault.aspx/tabid-10081/151_read-26288/year-all/#/gallery/29934

The information compiled and edited in this article was provided by Herbert J. Kramer from his documentation of: “Observation of the Earth and Its Environment: Survey of Missions and Sensors” (Springer Verlag) as well as many other sources after the publication of the 4th edition in 2002. - Comments and corrections to this article are always welcome for further updates (eoportal@symbios.space).